Also, note that we need to replace “ Program Files” with “ Progra~1” and “ Program Files (x86)” with “ Progra~2“.

Now, we need to set few environment variables which are required in order to set up Spark on a Windows machine. Spark installation folder Step 3- Set the environment variables tgz file.Īfter downloading the spark build, we need to unzip the zipped folder and copy the “spark-2.4.3-bin-hadoop2.7” folder to the spark installation folder, for example, C:\Spark\ (The unzipped directory is itself a zipped directory and we need to extract the innermost unzipped directory at the installation path.).

#How to install apache spark download

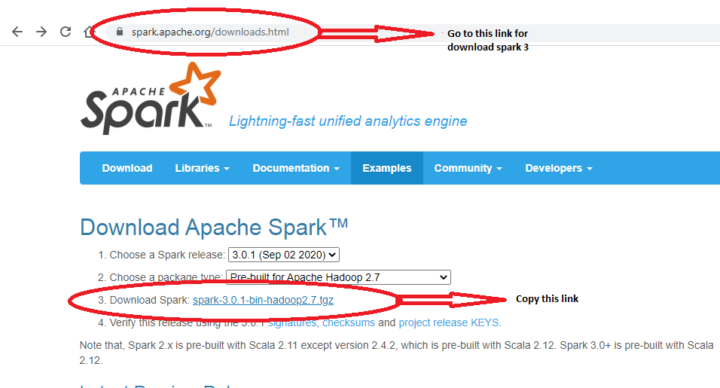

Next, click on the download “ spark-2.4.3-bin-hadoop2.7.tgz” to get the.

The default spark package type is pre-built for Apache Hadoop 2.7 and later which works fine. The latest available Spark version (at the time of writing) is Spark 2.4.3. Now we need to download Spark latest build from Apache Spark’s home page. Step 2 – Download and install Apache Spark latest version Once the file gets downloaded, double click the executable binary file to start the installation process and then follow the on-screen instructions.

#How to install apache spark 64 Bit

JDK 8 DownloadĪs highlighted, we need to download 32 bit or 64 bit JDK 8 appropriately. We can download the JDK 8 from the Oracle official website. Java JDK 8 is required as a prerequisite for the Apache Spark installation. To install Apache Spark on a local Windows machine, we need to follow below steps: Step 1 – Download and install Java JDK 8 Here, in this post, we will learn how we can install Apache Spark on a local Windows Machine in a pseudo-distributed mode (managed by Spark’s standalone cluster manager) and run it using PySpark ( Spark’s Python API). To read more on Spark Big data processing framework, visit this post “ Big Data processing using Apache Spark – Introduction“. It can run on clusters managed by Hadoop YARN, Apache Mesos, or by Spark’s standalone cluster manager itself. It is a very powerful cluster computing framework which can run from a single cluster to thousands of clusters. Apache Spark is a general-purpose big data processing engine.
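The extraction in Step 2 can also be scripted. The sketch below is one way to do it with Python's standard `tarfile` module, assuming the `.tgz` has already been downloaded. In `"r:gz"` mode, `tarfile` removes the gzip layer and unpacks the inner tar in one pass, so there is no intermediate archive to extract by hand. The helper name `extract_spark` and the `C:\Spark` destination are illustrative assumptions, not part of the original instructions.

```python
import tarfile
from pathlib import Path


def extract_spark(archive: str, install_dir: str) -> Path:
    """Extract a Spark .tgz build into install_dir (hypothetical helper).

    Returns the path of the extracted top-level Spark folder,
    e.g. <install_dir>/spark-2.4.3-bin-hadoop2.7.
    """
    dest = Path(install_dir)
    dest.mkdir(parents=True, exist_ok=True)
    # "r:gz" decompresses the gzip layer and reads the inner tar in one step.
    with tarfile.open(archive, "r:gz") as tgz:
        # Expect a single versioned folder at the root of the archive.
        top_level = {Path(name).parts[0] for name in tgz.getnames()}
        if len(top_level) != 1:
            raise ValueError(f"expected one top-level folder, found {sorted(top_level)}")
        if hasattr(tarfile, "data_filter"):
            # Newer Pythons: refuse absolute paths and links escaping dest.
            tgz.extractall(dest, filter="data")
        else:
            tgz.extractall(dest)
    return dest / top_level.pop()
```

For example, `extract_spark("spark-2.4.3-bin-hadoop2.7.tgz", r"C:\Spark")` would return the path of the extracted `spark-2.4.3-bin-hadoop2.7` folder, which is the Spark installation path used in Step 3. The `filter="data"` argument is passed only on Python versions that support tar extraction filters.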

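The path rewriting in Step 3 is easy to get wrong by hand, so here is a small Python sketch of it. It assumes the variables being set are `JAVA_HOME`, `SPARK_HOME`, and `PATH` (the post does not list them), and that the machine uses the usual short names `Progra~1` and `Progra~2`; running `dir /x C:\` shows the actual short names. The helpers `to_short_path` and `spark_env` are hypothetical. They only build the values and do not write them to Windows.

```python
import ntpath


def to_short_path(path: str) -> str:
    """Swap the 'Program Files' folders for their usual 8.3 short names."""
    # Order matters: handle the (x86) folder before the plain one.
    path = path.replace("Program Files (x86)", "Progra~2")
    return path.replace("Program Files", "Progra~1")


def spark_env(java_home: str, spark_home: str, existing_path: str = "") -> dict:
    """Build assumed environment variables for a local Spark setup.

    JAVA_HOME and SPARK_HOME are assumed variable names; the bin folders
    are prepended to PATH so java and the Spark launch scripts are found.
    """
    java_home = to_short_path(java_home)
    env = {"JAVA_HOME": java_home, "SPARK_HOME": spark_home}
    # ntpath joins with backslashes regardless of the host OS.
    bins = [ntpath.join(java_home, "bin"), ntpath.join(spark_home, "bin")]
    env["PATH"] = ";".join(bins + ([existing_path] if existing_path else []))
    return env
```

For instance, a JDK under `C:\Program Files\Java\jdk1.8.0_201` (a hypothetical version folder) yields `JAVA_HOME=C:\Progra~1\Java\jdk1.8.0_201`. If the two replacements ran in the opposite order, a JDK under `Program Files (x86)` would end up as `Progra~1 (x86)`, which is why the x86 case is handled first.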